Cognitive Bias, AI, and the Brain: Why Ethical AI Needs Neuroscience - Issue #24

Community Edition

Your Brain Has Bugs, and So Does Your Algorithm

If you’ve watched Inside Out, you can easily imagine your brain as a chaotic group chat. There’s Anxiety constantly yelling worst-case scenarios, Joy sharing memes to cope, Nostalgia dropping memories from 2016, and Anger waiting to unleash its ‘forbidden-in-polite-settings’ vocabulary. And they all have to vote on every decision. The winning vote isn’t always the rational one, because your brain already leans toward its own subjective reality. That built-in lean is what we call a cognitive bias.

Bias isn’t always a bad thing. It’s just your brain’s way of saving energy. It hates uncertainty, so it uses shortcuts (called heuristics) to make decisions quickly. Unfortunately, these shortcuts often trip us up in ways we wouldn’t want to admit. For example:

Confirmation Bias: You’ve already decided pineapple doesn’t belong on pizza, so every article, reel, or meme you see becomes “proof”, even if Gordon Ramsay himself were to disagree.

Availability Bias: Heard about the back-to-back plane crashes on the news? Suddenly, flying feels riskier than driving, even though the statistics say otherwise. Your brain mistakes recent for frequent.

Anchoring: You see a hoodie priced at ₹4999, then discounted to ₹1999. Feels like a steal, right? That’s anchoring. The first number sets the bar.

Negativity Bias: You got 20 comments. 19 were positive. Guess which one kept you up at 3 am?

These glitches aren’t rare. They’re default settings. We get by with them just fine.

But guess what? When we build AI systems, these same biases sneak into the codebase.

If you had to train an AI on every decision you’ve ever made while hangry, overthinking, or sleep-deprived, would you trust it to run your city’s healthcare? Nope? But that’s closer to reality than you’d think.

AI doesn’t emerge from some pure mathematical utopia. It’s born from data. And that data? It’s us. Messy, biased, emotional us.

Some real-world overlaps we see:

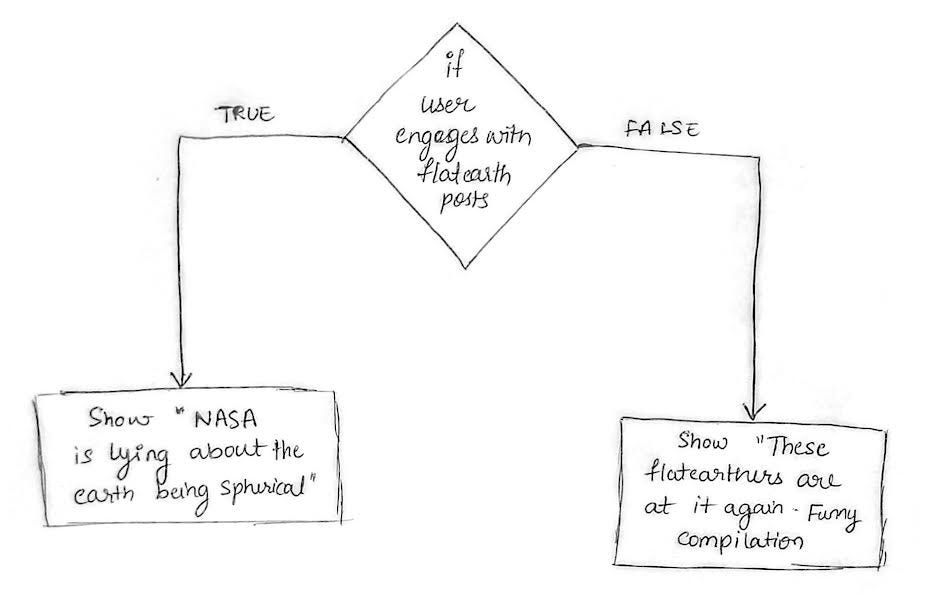

Confirmation Bias in Echo Chambers: Recommender algorithms feed you what you already agree with. Flat Earthers and conspiracy theorists don’t fall into the rabbit hole by accident!

Availability Bias in Overrepresentation of Rare Events: Algorithms might flag people as high-risk simply because certain crimes made headlines recently and are overrepresented in the data, marginalizing specific social groups.

Anchoring in Biased Benchmarks: Resume-screening AIs might prioritize Ivy League names over actual skills. Data anchored to brand names results in AI anchored to brand names.

Negativity Bias in Toxic Content: Platforms often reward rage-bait because it spikes engagement, and an engagement-optimized AI reads that spike as “quality”.
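To see how an echo chamber can grow out of a perfectly “neutral” ranking rule, here is a toy Python sketch. It rests on loudly stated assumptions: `simulate_feed` is a hypothetical recommender that re-allocates feed exposure in proportion to the clicks each topic earned in the previous round, and the click probabilities are invented for illustration. No real platform is this simple.

```python
"""Toy echo-chamber loop (hypothetical, not any real platform's algorithm)."""


def simulate_feed(click_prob: dict[str, float], rounds: int) -> list[dict[str, float]]:
    """Return each topic's share of the feed after every round.

    Assumption: exposure starts uniform. Each round, expected clicks for a
    topic are exposure * click probability, and the next round's exposure
    is that topic's share of the total clicks (a rich-get-richer update).
    """
    topics = list(click_prob)
    exposure = {t: 1 / len(topics) for t in topics}
    history = [dict(exposure)]
    for _ in range(rounds):
        clicks = {t: exposure[t] * click_prob[t] for t in topics}
        total = sum(clicks.values())
        exposure = {t: clicks[t] / total for t in topics}
        history.append(dict(exposure))
    return history


if __name__ == "__main__":
    # Invented click rates: the user is only mildly more likely to click
    # content they already agree with.
    prefs = {"agrees_with_you": 0.6, "neutral": 0.4, "challenges_you": 0.2}
    for step, shares in enumerate(simulate_feed(prefs, rounds=10)):
        if step in (0, 5, 10):
            print(step, {t: round(s, 3) for t, s in shares.items()})
```

Under these made-up numbers, a modest 0.6 vs 0.2 click preference compounds: after ten rounds the agreeing topic holds roughly 98% of the feed, even though nothing in the rule explicitly says “show people what they already believe”.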

AI doesn't invent these patterns. It copies and scales them at warp speed. AI bias is a mirror. We think we’re building something smarter than us. In reality, we’re just digitizing our blind spots. The result? Machines that aren’t malicious but are indifferent in the exact ways we’ve taught them to be.
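The “copies and scales” point, and the resume-screening example above, can be made concrete with a small Python sketch. Everything here is synthetic and hypothetical: `make_history` invents past hiring decisions in which recruiters gave an Ivy League flag three times the pull of skill, and `train_logistic` is a plain logistic regression fitted by gradient descent. It is an illustration of learning from biased labels, not how any particular screening product works.

```python
"""Toy sketch: a model trained on biased (synthetic) hiring history
learns to lean on a brand-name proxy."""
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def make_history() -> list[tuple[float, float, float]]:
    """Hypothetical past decisions as (ivy_league, skill, hire_rate).

    Assumption: made-up recruiters hired at rate
    0.15 + 0.6 * ivy + 0.2 * skill, so the school flag mattered far more
    than skill did.
    """
    rows = []
    for ivy in (0.0, 1.0):
        for skill in (0.0, 0.25, 0.5, 0.75, 1.0):
            rows.append((ivy, skill, 0.15 + 0.6 * ivy + 0.2 * skill))
    return rows


def train_logistic(
    rows: list[tuple[float, float, float]], lr: float = 1.0, steps: int = 5000
) -> tuple[float, float, float]:
    """Fit (bias, w_ivy, w_skill) by batch gradient descent on cross-entropy.

    Soft targets are fine here: the gradient w.r.t. each weight is still
    the mean of (prediction - target) * feature.
    """
    b = w_ivy = w_skill = 0.0
    n = len(rows)
    for _ in range(steps):
        gb = g_ivy = g_skill = 0.0
        for ivy, skill, y in rows:
            err = sigmoid(b + w_ivy * ivy + w_skill * skill) - y
            gb += err
            g_ivy += err * ivy
            g_skill += err * skill
        b -= lr * gb / n
        w_ivy -= lr * g_ivy / n
        w_skill -= lr * g_skill / n
    return b, w_ivy, w_skill


if __name__ == "__main__":
    b, w_ivy, w_skill = train_logistic(make_history())
    print(f"weight on Ivy League flag: {w_ivy:.2f}")
    print(f"weight on skill:           {w_skill:.2f}")
    # Same skill, different school: the model scores them very differently.
    print(f"ivy, skill 0.5:     {sigmoid(b + w_ivy + 0.5 * w_skill):.2f}")
    print(f"non-ivy, skill 0.5: {sigmoid(b + 0.5 * w_skill):.2f}")
```

Nothing in the training code mentions prestige; the model simply finds that the school flag best explains the (biased) labels and weights it clearly above skill. Scale that to millions of resumes and the bias is no longer one recruiter’s habit but a system-wide default.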

In humans, implicit bias is shaped by exposure, emotion, memory, and social learning.

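In machines, inductive bias is the set of assumptions we bake into the learning algorithm so it can generalize from limited data: which features to prioritize, what model structure to use, and what loss function to minimize. On top of that sits whatever bias lives in the datasets we choose to train on.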

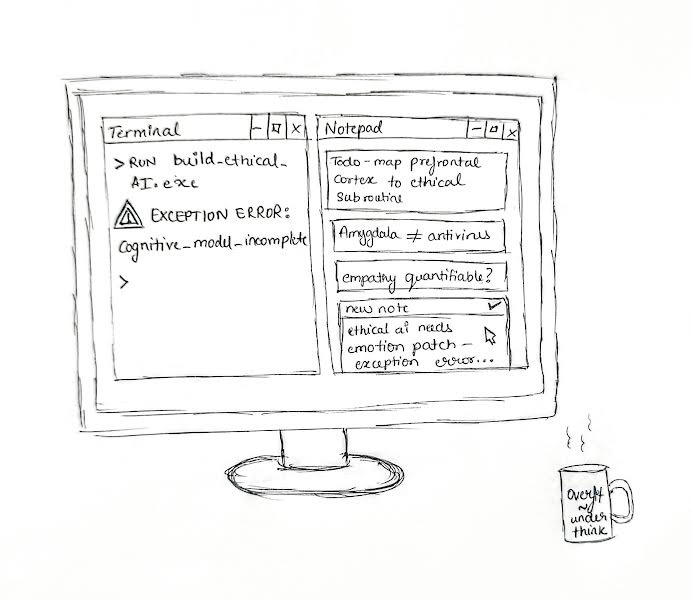

But both are shaped by humans. And both make decisions under uncertainty. We built systems in our image without even knowing what that image is. And that is where neuroscience comes into play.

The bottom line? The brain is not a perfectly logical machine. It’s a chaotic, deeply flawed, survival-driven organ. So when we build “thinking” machines while ignoring neuroscience, we copy its flawed logic without the adaptive messiness that makes us human.

No AI is perfect, because our understanding of the brain isn’t perfect. And unless we build cognitive, emotional, and ethical awareness into AI, we’re just encoding ignorance at scale.

So the next time someone says “AI is unbiased,” ask them: Which brain was it trained on? Because if it were ours… Well, let’s just say we’ve got some debugging to do.

For those curious, here’s a bit of science that shaped this piece:

🔦 Researcher Spotlight 🔦

We are excited to feature Hemashree Yogesha, an AI engineer by profession and a computational neuroscientist by passion. With a background spanning brain science, machine learning, and ethical AI, she has worked at the intersection of neuroscience and technology across both academic and applied domains.

Her work includes building reaction-time apps, mental health tools, and brain tumor detection models, while also exploring spinal-brain neural dynamics during her time as a guest researcher at the Max Planck Institute for Human Cognitive and Brain Sciences. Currently, she is focused on embedding neuroscience into AI systems to make them more ethical, especially in high-stakes areas like sexual assault prevention. Outside of tech, Hemashree is a Bharatanatyam artiste, vocalist, and theatre enthusiast, bringing creativity and empathy into every dimension of her work.

🌟 Neurotech Pulse Special Edition: Call for Contributions!

Calling all researchers and writers! Want to showcase your work in our upcoming Neurotech Insights edition? Reach 5,000+ neurotech enthusiasts by sharing your innovative research. Submit your details and work to saman.nawaz@nexstem.ai

❤️ Join us on Discord.
